In the previous article (Kubernetes for Developers #26: Managing Container CPU, Memory Requests and Limits), we successfully configured requests and limits for the containers in a Pod. However, developers can forget to set these resources, and their containers may then consume more than their fair share of the cluster's resources.

We can solve this problem by setting default requests and limits for the containers in a namespace using the Kubernetes LimitRange resource. Another benefit of LimitRange is validation: when a developer sets a container's requests or limits below the LimitRange min or above its max, the API server rejects the Pod when it is created, so it never reaches a node.
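As a minimal sketch of namespace-level defaults, assume a namespace named `dev` (hypothetical, created beforehand with `kubectl create namespace dev`). The LimitRange below sets a default request and limit for every container in that namespace, and the Pod after it declares no `resources` section at all. The names `default-resources` and `no-resources-demo`, the `nginx` image and the values are illustrative only.

```yaml
# Hypothetical LimitRange: defaults for containers in the "dev" namespace
apiVersion: v1
kind: LimitRange
metadata:
  name: default-resources
  namespace: dev
spec:
  limits:
  - type: Container
    defaultRequest:      # used when a container sets no request
      cpu: 100m
      memory: 128Mi
    default:             # used when a container sets no limit
      cpu: 250m
      memory: 256Mi
---
# Hypothetical Pod with no resources section; defaults are filled in at admission
apiVersion: v1
kind: Pod
metadata:
  name: no-resources-demo
  namespace: dev
spec:
  containers:
  - name: app
    image: nginx
```

Once the LimitRange exists and the Pod is created, `kubectl get pod no-resources-demo -n dev -o yaml` should show the container with requests of 100m CPU and 128Mi memory and limits of 250m CPU and 256Mi memory, even though the manifest never set them.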

LimitRange stops developers from creating containers that are too small or too large, because each Pod is validated against the LimitRange min and max values when it is created.
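A sketch of the validation side, again assuming a hypothetical `dev` namespace: the LimitRange allows each container between 100m and 1 CPU, and the Pod asks for 2 CPUs. The names `min-max-resources` and `too-big-demo` and all values are illustrative.

```yaml
# Hypothetical LimitRange: per-container min and max in the "dev" namespace
apiVersion: v1
kind: LimitRange
metadata:
  name: min-max-resources
  namespace: dev
spec:
  limits:
  - type: Container
    min:
      cpu: 100m
      memory: 64Mi
    max:
      cpu: "1"
      memory: 1Gi
---
# Hypothetical Pod whose CPU request and limit exceed max.cpu
apiVersion: v1
kind: Pod
metadata:
  name: too-big-demo
  namespace: dev
spec:
  containers:
  - name: app
    image: nginx
    resources:
      requests:
        cpu: "2"         # above max.cpu of 1
        memory: 512Mi
      limits:
        cpu: "2"
        memory: 512Mi
```

With the LimitRange in place, `kubectl apply` on the Pod should fail with a forbidden error from the API server that mentions the maximum CPU per container, rather than leaving the Pod pending for the scheduler.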

The fields in the LimitRange YAML manifest are:

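- type: Specifies whether the LimitRange settings apply to each container individually or to the entire Pod (the sum across all of its containers). Acceptable values are Container and Pod (PersistentVolumeClaim is also supported, for storage requests).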

- defaultRequest: These values are added to a container automatically when it doesn't set its own CPU and memory requests. If the container sets a limit but no request, Kubernetes uses that limit as the request instead.

- default: These values are added to a container automatically when it doesn't set its own CPU and memory limits.

- min: Sets the minimum CPU and memory requests that a container in a Pod can declare. The defaultRequest values cannot be lower than these values, and a Pod can't be created when its CPU or memory requests are below them.

- max: Sets the maximum CPU and memory limits that a container in a Pod can declare. The default values cannot be higher than these values, and a Pod can't be created when its CPU or memory limits exceed them.
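Putting the fields together, here is a sketch of a single LimitRange that uses all of them. The namespace `dev`, the name `container-limits` and the values are hypothetical; they are chosen so that min ≤ defaultRequest ≤ default ≤ max, which the API server checks when the LimitRange itself is created. The second entry shows the Pod type, which constrains the total across all containers in a Pod.

```yaml
# Hypothetical LimitRange combining every field described above
apiVersion: v1
kind: LimitRange
metadata:
  name: container-limits
  namespace: dev
spec:
  limits:
  - type: Container
    defaultRequest:   # request injected when a container sets none
      cpu: 200m
      memory: 256Mi
    default:          # limit injected when a container sets none
      cpu: 500m
      memory: 512Mi
    min:              # lowest value a container may declare
      cpu: 100m
      memory: 128Mi
    max:              # highest value a container may declare
      cpu: "1"
      memory: 1Gi
  - type: Pod
    max:              # ceiling on the sum across all containers in the Pod
      cpu: "2"
      memory: 2Gi
```

Assuming the manifest is saved as `limitrange.yaml` (hypothetical file name), apply it with `kubectl apply -f limitrange.yaml` and check the effective settings with `kubectl describe limitrange container-limits -n dev`.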

- Kubernetes for Developers #26: Managing Container CPU, Memory Requests and Limits

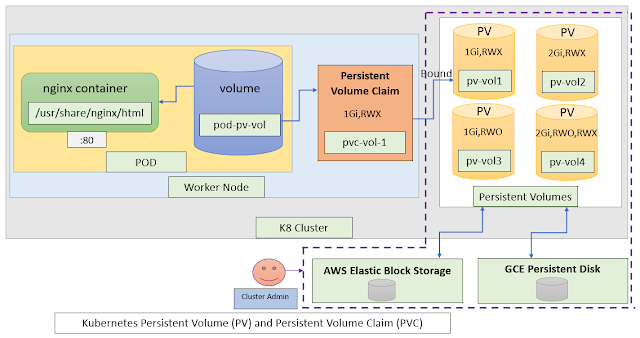

- Kubernetes for Developers #25: PersistentVolume and PersistentVolumeClaim in-detail

- Kubernetes for Developers #24: Kubernetes Volume hostPath in-detail

- Kubernetes for Developers #23: Kubernetes Volume emptyDir in-detail

- Kubernetes for Developers #22: Access to Multiple Clusters or Namespaces using kubectl and kubeconfig

- Kubernetes for Developers #21: Kubernetes Namespace in-detail

- Kubernetes for Developers #20: Create Automated Tasks using Jobs and CronJobs

- Kubernetes for Developers #19: Manage app credentials using Kubernetes Secrets

- Kubernetes for Developers #18: Manage app settings using Kubernetes ConfigMap

- Kubernetes for Developers #17: Expose service using Kubernetes Ingress

- Kubernetes for Developers #16: Kubernetes Service Types - ClusterIP, NodePort, LoadBalancer and ExternalName

- Kubernetes for Developers #15: Kubernetes Service YAML manifest in-detail

- Kubernetes for Developers #14: Kubernetes Deployment YAML manifest in-detail

- Kubernetes for Developers #13: Effective way of using K8 Readiness Probe

- Kubernetes for Developers #12: Effective way of using K8 Liveness Probe

- Kubernetes for Developers #11: Pod Organization using Labels

- Kubernetes for Developers #10: Kubernetes Pod YAML manifest in-detail

- Kubernetes for Developers #9: Kubernetes Pod Lifecycle

- Kubernetes for Developers #8: Kubernetes Object Name, Labels, Selectors and Namespace

- Kubernetes for Developers #7: Imperative vs. Declarative Kubernetes Objects

- Kubernetes for Developers #6: Kubernetes Objects

- Kubernetes for Developers #5: Kubernetes Web UI Dashboard

- Kubernetes for Developers #4: Enable kubectl bash autocompletion

- Kubernetes for Developers #3: kubectl CLI

- Kubernetes for Developers #2: Kubernetes for Local Development

- Kubernetes for Developers #1: Kubernetes Architecture and Features